Security

For security, Ansible provides a feature called Ansible Vault for storing sensitive data in encrypted form.

Since Ansible is an infrastructure-as-code tool, your code is usually stored in a version control system such as CVS, SVN, Git, TFS, …

To prevent anyone with access to that repository from reading sensitive data, encrypt that data with Ansible Vault.

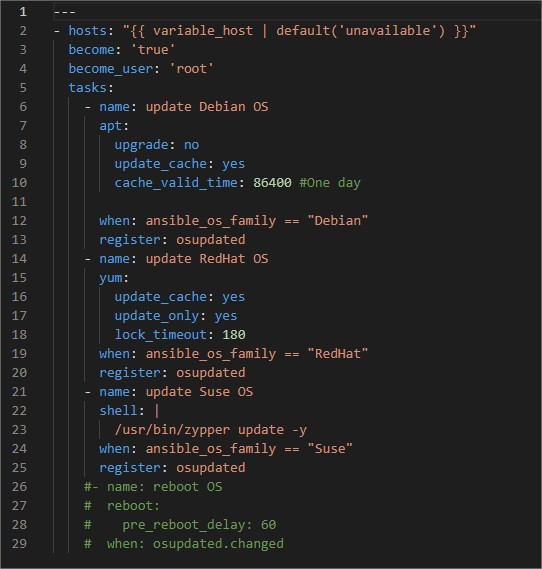
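The repository holds the vault files, so a secrets file should never be committed in plain text. The sketch below is a hypothetical pre-commit check. The script name `check_vault.py` and the helper names are illustrative and are not part of Ansible. It relies only on one fact: a file encrypted by `ansible-vault` starts with a header line such as `$ANSIBLE_VAULT;1.1;AES256`. It therefore recognises whole-file encryption, as produced by `ansible-vault create`. It does not recognise single values encrypted with `ansible-vault encrypt_string`.

```python
"""Hypothetical pre-commit check: flag secret files committed without vault encryption."""
import sys
from pathlib import Path
from typing import List

# Files encrypted by ansible-vault begin with a header line such as
# "$ANSIBLE_VAULT;1.1;AES256" (format version and cipher follow the prefix).
VAULT_PREFIX = "$ANSIBLE_VAULT;"


def is_vault_encrypted(text: str) -> bool:
    """Return True if the text starts with an Ansible Vault header line."""
    return text.lstrip().startswith(VAULT_PREFIX)


def check_files(paths: List[str]) -> List[str]:
    """Return the paths whose content is NOT vault-encrypted."""
    return [p for p in paths if not is_vault_encrypted(Path(p).read_text(encoding="utf-8"))]


if __name__ == "__main__":
    # Example: python check_vault.py secret-info.yml
    unencrypted = check_files(sys.argv[1:])
    for path in unencrypted:
        print(f"not vault-encrypted: {path}", file=sys.stderr)
    if unencrypted:
        sys.exit(1)
```

Run it against the files that are meant to be encrypted, for example `python check_vault.py secret-info.yml`. A non-zero exit status, together with the printed paths, points to files that are still in plain text.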

Secure the content of playbooks.

- Create and keep sensitive data encrypted with AES (AES256):
  - Run `ansible-vault create secret-info.yml`.
  - Enter a vault password twice (the second entry confirms it).
  - Type your sensitive data in the text editor that opens; the file is encrypted when you save and close it.

- Edit the vault:
  - Run `ansible-vault edit secret-info.yml` and enter the vault password.
  - Edit your sensitive data in the text editor; the file is re-encrypted when you save and close it.

- Use the vault:
  - Add the vault file to the `vars_files` list of your playbook, e.g. `vars_files: [secret-info.yml]`.
  - Run `ansible-playbook playbook.yml --ask-vault-pass`.
  - Ansible prompts for the vault password before running the playbook.
  - To automate runs, avoid the interactive prompt: retrieve the password from a secured tool such as HashiCorp Vault instead.
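For the automation point above, `ansible-playbook` also accepts `--vault-password-file`. When that file is executable, Ansible runs it and uses its standard output as the vault password. The sketch below is a minimal password script under stated assumptions. It assumes the CI job, or an earlier step that queries a secrets manager such as HashiCorp Vault, exports the password in an environment variable. The name `VAULT_PASSWORD_FROM_CI` is hypothetical. This is not an official Ansible or HashiCorp integration.

```python
#!/usr/bin/env python3
"""Hypothetical vault password script for unattended ansible-playbook runs."""
import os
import sys
from typing import Mapping, Optional

# Hypothetical variable name: set it from a CI secret, or from a value fetched
# beforehand from a secrets manager such as HashiCorp Vault.
PASSWORD_ENV_VAR = "VAULT_PASSWORD_FROM_CI"


def get_vault_password(env: Mapping[str, str]) -> Optional[str]:
    """Return the vault password, or None when it is missing or empty."""
    password = env.get(PASSWORD_ENV_VAR, "")
    return password or None


def main(env: Mapping[str, str] = os.environ) -> int:
    """Print the password for Ansible to read; return a process exit code."""
    password = get_vault_password(env)
    if password is None:
        # Fail loudly instead of handing Ansible an empty password.
        print(f"{PASSWORD_ENV_VAR} is not set or empty", file=sys.stderr)
        return 1
    # Ansible reads the vault password from this script's standard output.
    print(password)
    return 0
```

To use it, follow these steps:

1. Save it under a name of your choice, for example `vault_pass.py` (a hypothetical name).
2. End the file with `if __name__ == "__main__": sys.exit(main())`.
3. Make it executable with `chmod +x vault_pass.py`.
4. Run `ansible-playbook playbook.yml --vault-password-file ./vault_pass.py` instead of using `--ask-vault-pass`.

Returning a non-zero exit code when the variable is missing makes the run fail early, so the playbook never continues with a wrong or empty password.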